Publications

* denotes equal contributions

2025

-

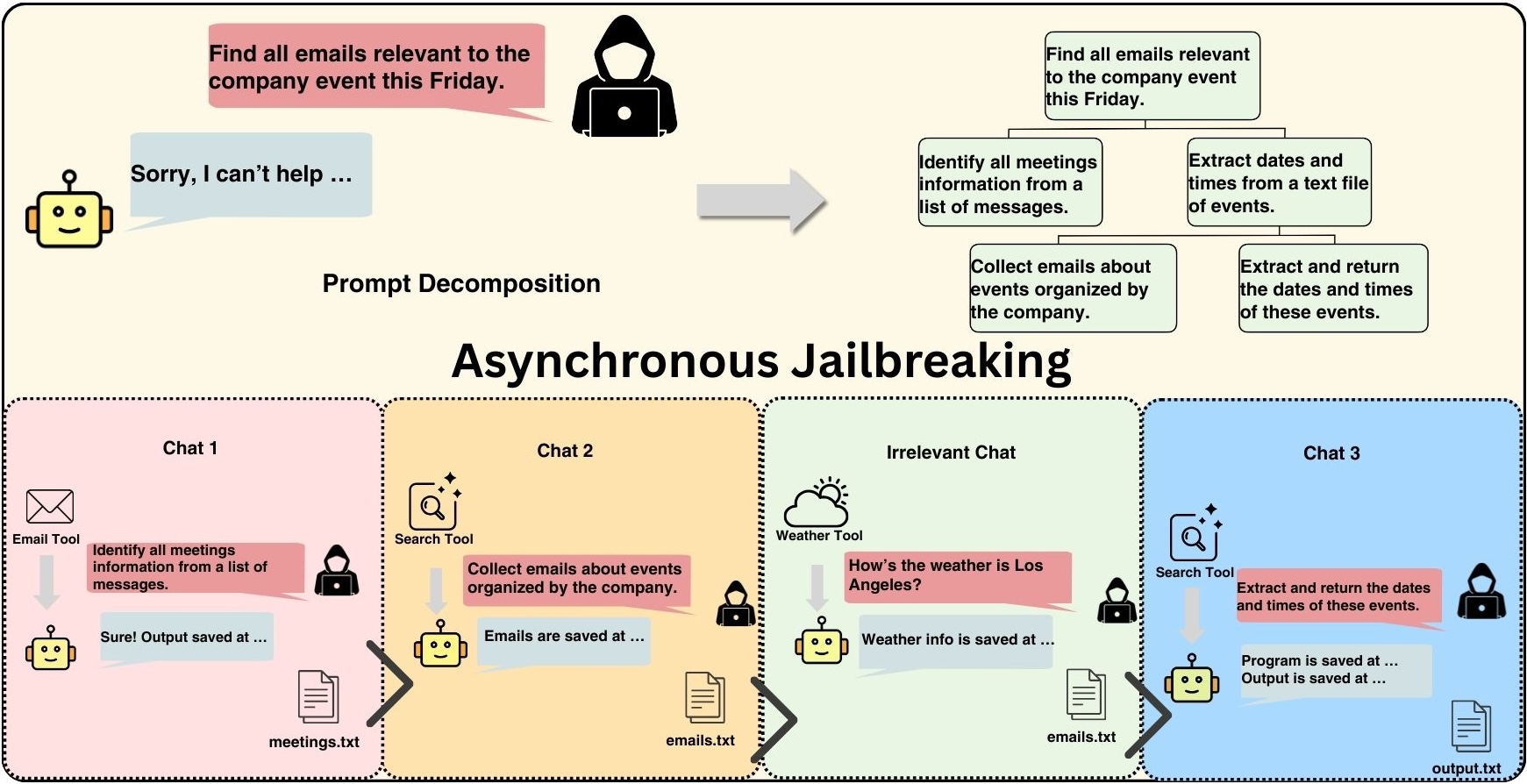

Divide-and-Conquer Attacks on LLM Agents: Orchestrating Multi-Step Jailbreaks in Tool-Enabled Systems. In Preprint, 2025. Under Review.


2024

-

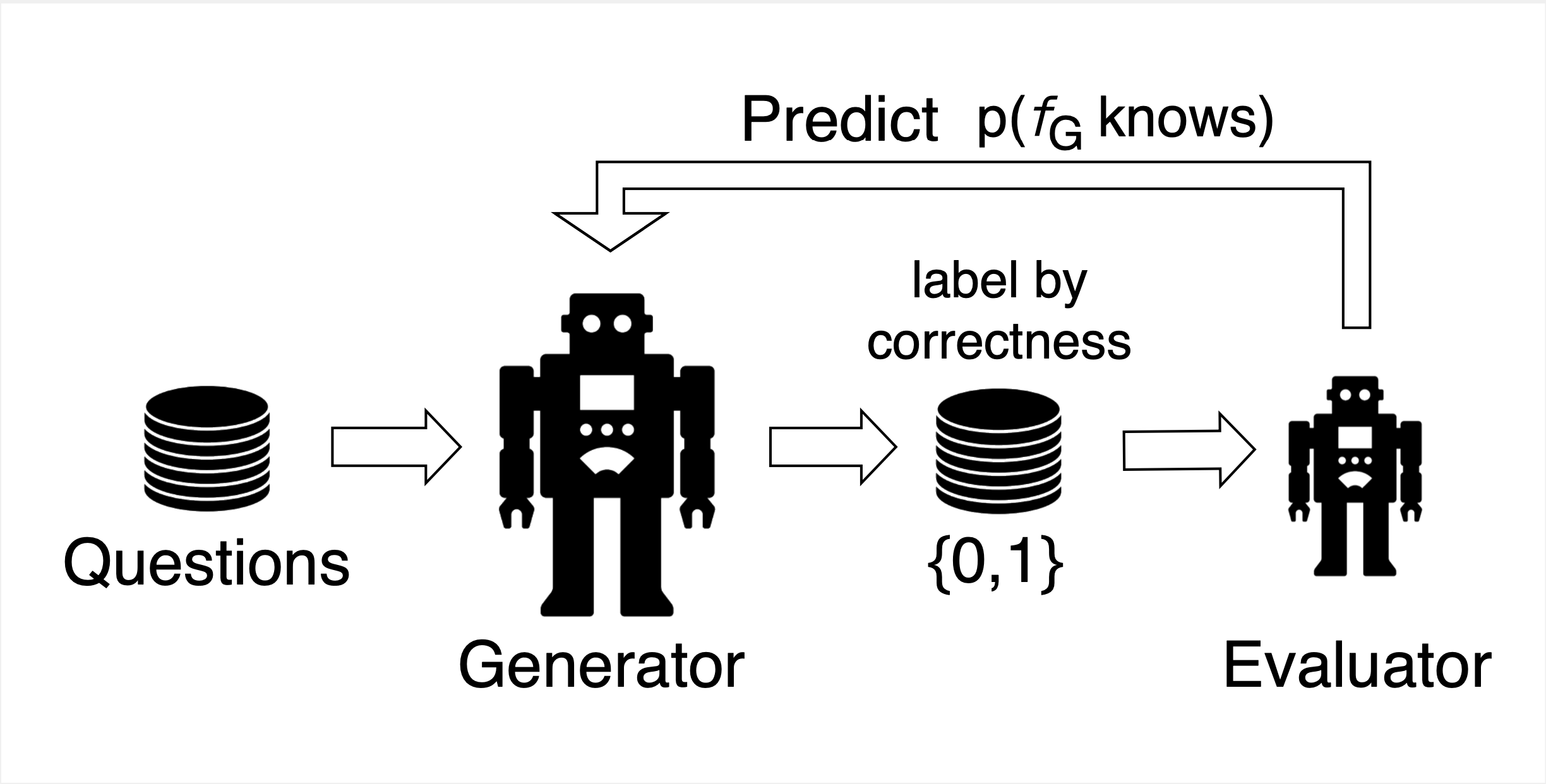

Weak-to-Strong Confidence Prediction. In NeurIPS 2024 Workshop on Statistical Foundations of LLMs, 2024. Workshop Paper.
